What is Edge and Edge Computing

According to Wikipedia, edge computing is a method of optimizing applications or cloud computing systems by taking some portion of an application, its data, or its services away from one or more central nodes (the “core”) to the other logical extreme (the “edge”) of the Internet, which makes contact with the physical world or end users [1]. To clarify what “edge” means, I’ll start with a question: why do we need the edge? To answer it, we first need to know how IoT devices generally work. A typical IoT application works as follows:

- Sensors and actuators sit close to the phenomenon being observed; they take measurements and perform lightweight computations.
- The sensors collect data and send it to a cloud provider that offers IoT services.
- The logic runs in the cloud. This logic can be decision making, or a request to other services of the cloud provider such as ML.
- The cloud sends the result back to the IoT device.

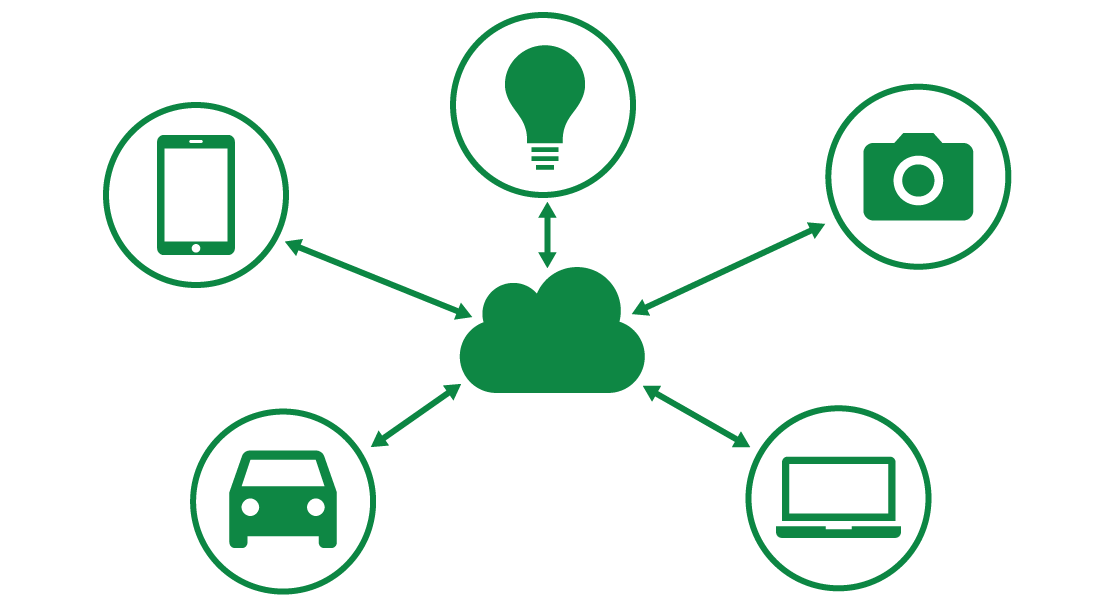

Figure 1 shows the communication between the cloud and IoT devices.
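The four steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the names `Reading`, `read_sensor`, `cloud_decide`, and `round_trip` are hypothetical, the "network" is simulated with a JSON string, and the threshold rule stands in for whatever logic a real cloud service would run.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class Reading:
    sensor_id: str
    temperature_c: float


def read_sensor(sensor_id: str, raw_value: float) -> Reading:
    """Step 1: measure and do a lightweight computation (here, rounding)."""
    return Reading(sensor_id, round(raw_value, 1))


def cloud_decide(message: str, threshold_c: float = 30.0) -> str:
    """Step 3: hypothetical cloud-side logic, a simple threshold rule."""
    reading = Reading(**json.loads(message))
    return "fan_on" if reading.temperature_c > threshold_c else "fan_off"


def round_trip(sensor_id: str, raw_value: float) -> str:
    reading = read_sensor(sensor_id, raw_value)   # step 1: sense
    message = json.dumps(asdict(reading))         # step 2: send to the cloud
    command = cloud_decide(message)               # step 3: logic in the cloud
    return command                                # step 4: result goes back


if __name__ == "__main__":
    print(round_trip("sensor-1", 31.26))
    print(round_trip("sensor-1", 22.04))
```

Every reading makes a full trip to the cloud and back before the device can act, which is where the latency and bandwidth concerns discussed in this article come from.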

On the one hand, this centralized architecture has several advantages for IoT devices, including:

- High computational power. IoT devices get access to virtually unlimited computational power for heavy tasks such as ML.
- High storage capacity. IoT devices have limited storage, so they send data to the cloud to keep it for short or long periods.
- Scalability. There is no practical limit on the number of devices.
- Security. Cloud providers protect data by storing it in different zones.

On the other hand, this architecture also has drawbacks, including:

- Data transmission costs, in both bandwidth and resources.
- Cloud computation cannot meet the needs of time-sensitive IoT applications such as smart transportation and healthcare.
- Network traffic.
- Quality of Service (QoS) issues.
- Data privacy. Third parties may have access to IoT device data.
- Vendor dependency.

Moreover, IoT devices often have limited power. To extend their lifetime, computational tasks should be carried out by devices that have enough power and computational resources, which then send the result back to the IoT device with the lowest possible latency. To meet these requirements, computation should happen as close to the IoT device as possible. This calls for decentralized computational and storage resources located near the IoT devices, so that the devices can reach them easily and with minimal latency. Note that these resources are much smaller than cloud resources. With such resources in place, the problems listed above are addressed, with the following results:

- Heavy network traffic is reduced.
- Latency of data computation and storage for IoT devices drops significantly.
- Computational and communication overhead moves from nodes with limited power resources to edge nodes with significant power resources.

These decentralized resources are called edges.
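To make the latency argument concrete, here is a minimal sketch of how a device might choose where to run a task. It assumes a deliberately simple model in which total latency is the network round trip plus the compute time at the chosen site. The `Site` class, the `pick_site` function, and all the millisecond figures are hypothetical and chosen only for illustration; they are not measurements.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Site:
    name: str
    network_rtt_ms: float  # round trip between the IoT device and the site
    compute_ms: float      # time the site needs to run the task

    def total_ms(self) -> float:
        return self.network_rtt_ms + self.compute_ms


def pick_site(sites: List[Site], deadline_ms: float) -> Optional[Site]:
    """Return the fastest site that meets the deadline, or None if none does."""
    feasible = [s for s in sites if s.total_ms() <= deadline_ms]
    return min(feasible, key=Site.total_ms) if feasible else None


# Illustrative, made-up numbers: the cloud computes fastest but is far away,
# the device is closest but slow, and the edge node sits in between.
SITES = [
    Site("device", network_rtt_ms=0.0, compute_ms=400.0),
    Site("edge", network_rtt_ms=10.0, compute_ms=25.0),
    Site("cloud", network_rtt_ms=120.0, compute_ms=5.0),
]

if __name__ == "__main__":
    choice = pick_site(SITES, deadline_ms=50.0)
    print(choice.name if choice else "no site meets the deadline")
```

With these assumed numbers, a 50 ms deadline can only be met by the edge node, even though the cloud has the shortest compute time. This is the situation the time-sensitive applications mentioned earlier are in.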

Ambiguity in Edge definition

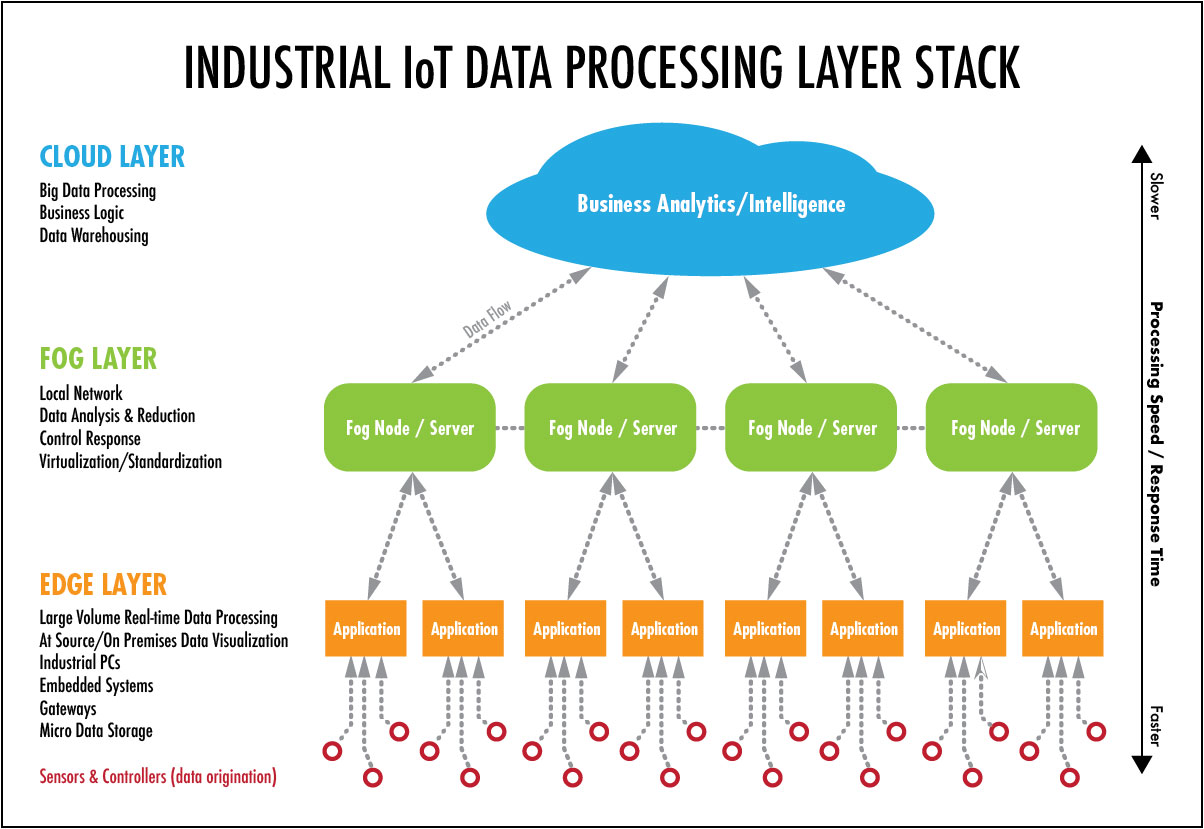

There are different definitions of the edge, and different companies have defined it in their own ways. Basically, cloud computing is the on-demand delivery of computing, storage, applications, and other resources by cloud service providers, and it sits on top of edge and fog computing. The term fog computing was coined by Cisco with the aim of bringing cloud computing capabilities close to IoT devices; to do so, Cisco uses its IOx application framework. AWS has implemented this architecture as well, using Greengrass. Greengrass is a software agent that provides some cloud services, such as Lambda and an MQTT broker, to IoT devices that are in the same group. The following picture illustrates the layer stack of computation.

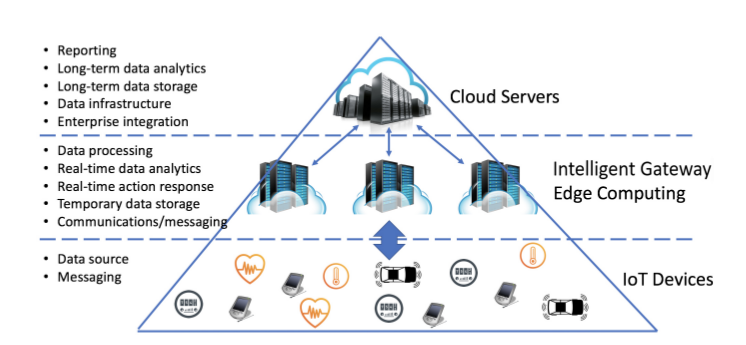

On the other hand, ETSI [4] defines the edge (or Edge of Network) as shown in the following figure.

Conclusion

The most important factor behind the definition of the edge is giving IoT devices access to different cloud services with the lowest possible latency.