Self-driving cars and Localization

In this article, I describe a possible approach to precisely positioning autonomous cars on streets and at intersections.

Overview

Before describing the localization of autonomous cars, I want to introduce some important components that may be used in the next generation of cars.

Basically, two elements are vital for driving a car: vision and processing. With these two elements, we can control the car. Before self-driving cars such as Tesla’s, a human driver provided both, using the eyes for vision and the brain for processing, and reacted accordingly to control the car. The idea of a self-driving car is basically to replace these two elements with vision sensors and computers.

To achieve this goal, many sensors and computational units have been added to cars over time. Among them, besides cameras, LiDAR (Light Detection And Ranging) plays an important role. LiDAR is a remote sensing device that determines the distance to a target surface by emitting pulsed laser light and measuring the reflected pulses. It sends its measurements over Ethernet to the computers.
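As a minimal sketch of the ranging principle, assuming a pulsed time-of-flight LiDAR: the pulse travels to the target and back, so the distance is half the round-trip time multiplied by the speed of light. The function name and the 200 ns example value are illustrative only.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact, by definition of the metre


def tof_to_distance(round_trip_seconds: float) -> float:
    """Distance to the target from the round-trip time of one laser pulse."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse covers the sensor-to-target path twice, so halve the total.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


# A pulse that returns after 200 ns corresponds to a target about 30 m away.
distance_m = tof_to_distance(200e-9)
```

At these time scales, one nanosecond of timing error corresponds to about 15 cm of range error, which is why the timing electronics matter so much.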

To access and process LiDAR data on a fast computer, ROS (Robot Operating System), a collection of software frameworks for robot software development, may be used. Why is it used in cars? It offers several advantages, two of which are especially useful. First, it provides a unified environment in which ROS nodes (i.e. sensors or other units) communicate with each other by publishing and subscribing to topics; in ROS 2 this is built on DDS (Data Distribution Service). Second, it provides a very useful feature called rosbag. A rosbag can access all ROS topics and provides two important services: recording the messages published to specific topics together with their timestamps, and playing the recorded messages back to those topics later. This lets car manufacturers (and, in case of an accident, traffic police) replay and debug what happened.
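The publish/subscribe and record/replay ideas can be illustrated with a small in-process toy. This is not the ROS or rosbag API: the class `ToyBus`, its methods, and the topic name `/lidar/points` are all made up for illustration.

```python
from collections import defaultdict


class ToyBus:
    """In-process stand-in for topic-based messaging (not the ROS API)."""

    def __init__(self):
        self._subscribers = defaultdict(list)  # topic -> list of callbacks
        self.bag = []  # recorded (timestamp, topic, message) tuples

    def subscribe(self, topic, callback):
        self._subscribers[topic].append(callback)

    def publish(self, topic, message, stamp, record=True):
        if record:
            self.bag.append((stamp, topic, message))
        for callback in self._subscribers[topic]:
            callback(message)

    def replay(self, topic):
        """Re-publish the recorded messages of one topic in timestamp order."""
        for stamp, recorded_topic, message in sorted(self.bag, key=lambda e: e[0]):
            if recorded_topic == topic:
                # Do not record again, otherwise the bag would grow on replay.
                self.publish(topic, message, stamp, record=False)


bus = ToyBus()
received = []
bus.subscribe("/lidar/points", received.append)
bus.publish("/lidar/points", "scan-0", stamp=0.0)
bus.publish("/lidar/points", "scan-1", stamp=0.1)
bus.replay("/lidar/points")  # the subscriber sees both scans a second time
```

The subscriber cannot tell live messages from replayed ones, which is the property that makes recorded drives useful for debugging.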

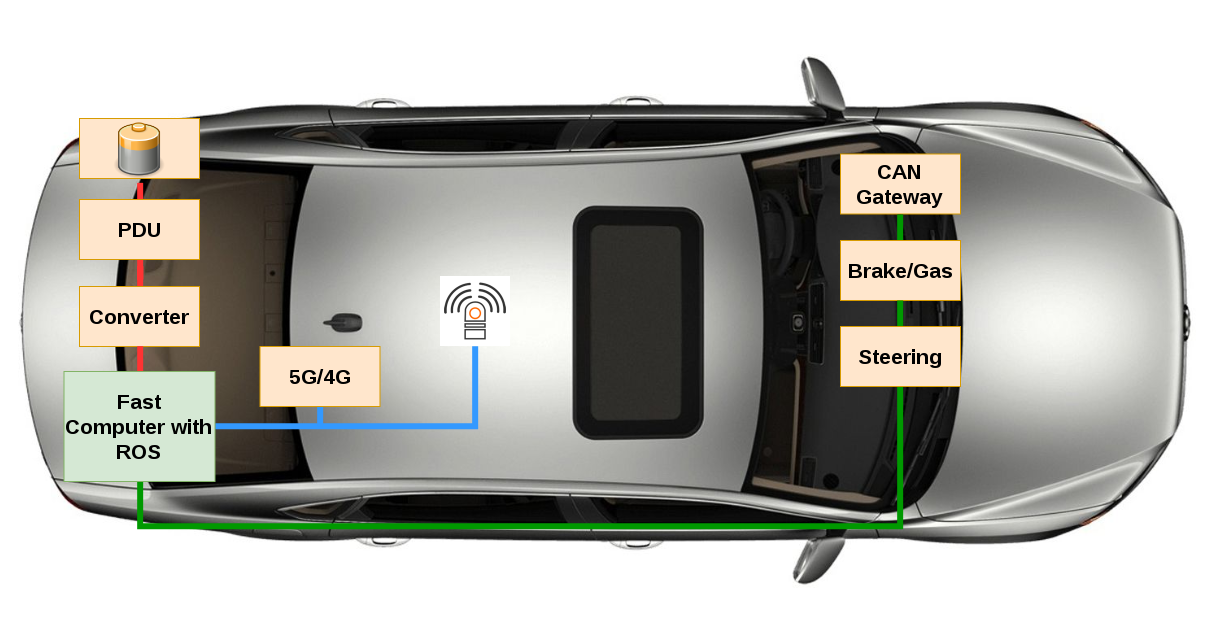

Besides Ethernet communication, which is becoming popular in cars, there is a CAN bus for internal communication between the different components of a car (sensors, actuators, and computational units). Most car manufacturers expose some methods that computational units can call, so that the car can be controlled programmatically [1]. So, the fast computer used for image processing can also send CAN messages to control the vehicle. The figure above shows a simplified version of the internal communication of new cars.
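To make the idea of a CAN message concrete, here is a rough sketch that packs and unpacks a classic CAN frame in the 16-byte layout used by Linux SocketCAN (identifier, data length, padding, 8 data bytes). The steering command, its identifier `0x123`, and the 0.1 degree scaling are hypothetical; real identifiers and payload layouts are manufacturer-specific.

```python
import struct

# SocketCAN-style layout: 32-bit id, 1-byte length, 3 pad bytes, 8 data bytes.
CAN_FRAME_FORMAT = "=IB3x8s"


def build_can_frame(can_id, data):
    """Pack a classic CAN frame with an 11-bit identifier."""
    if not 0 <= can_id <= 0x7FF:
        raise ValueError("standard CAN identifiers are 11 bits")
    if len(data) > 8:
        raise ValueError("classic CAN carries at most 8 data bytes")
    return struct.pack(CAN_FRAME_FORMAT, can_id, len(data), data.ljust(8, b"\x00"))


def parse_can_frame(frame):
    """Return (identifier, payload) with the zero padding stripped."""
    can_id, length, data = struct.unpack(CAN_FRAME_FORMAT, frame)
    return can_id, data[:length]


# Hypothetical steering command: signed 16-bit angle in units of 0.1 degree.
STEERING_CMD_ID = 0x123
payload = struct.pack("<h", -150)  # -15.0 degrees
frame = build_can_frame(STEERING_CMD_ID, payload)
```

Actually transmitting the frame would need a CAN interface and driver, which this sketch leaves out.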

In the figure, the blue lines are Ethernet links and the green one is the CAN bus. As you may know, new cars contain, besides the ECU and the CAN bus, an Ethernet network, powerful computers, and many new sensors and actuators. Using these technologies increases manufacturing costs on the one hand and helps us develop self-driving cars on the other. With that said, we can jump into possible localization methods for self-driving cars.

Localization

To enable a vehicle to drive on the streets without a human, one vital aspect is the exact localization of the vehicle at every moment. Nowadays, devices as well as people use some sort of Global Navigation Satellite System (GNSS) to get their position in the world. Although GNSS can provide very accurate position information (millimeter to sub-centimeter level relative positioning) [2], [3], its error grows whenever the signal is interrupted, for instance in tunnels, in dense streets surrounded by buildings, or under heavy vegetation. So, on its own, it is not suitable for self-driving cars.

Basically, to localize a vehicle, we need algorithms that estimate its location with an error of less than 10 cm. One possible solution is to let the vehicle localize itself using a highly accurate map together with features extracted from the data of the different sensors inside the vehicle. This approach not only improves localization accuracy when GNSS (e.g. GPS) is available, but also lets the vehicle localize itself when the GNSS signal is unavailable, using methods such as trilateration. The procedure can be roughly divided into three steps:

- Detect features in LiDAR or camera data with the help of computer vision algorithms. These features can be anything on the street, such as traffic lights, road signs and markings, trees, historical buildings, etc.

- Find the same features in the highly accurate map and extract their positions (map matching)

- Use these positions to estimate or improve the vehicle’s position

The more features are extracted from the sensor data and found in the map at each moment, the better the localization result of the vehicle will be.
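For the trilateration case mentioned above, the last step can be sketched as follows. This is a minimal 2D least-squares version, assuming the matched features have known map positions and that the sensor gives a range to each of them; the function name and the example coordinates are illustrative. Using more than three features simply adds rows to the system, which is one way to see why more matches help.

```python
from math import hypot


def trilaterate_2d(landmarks, ranges):
    """Least-squares 2D position from >= 3 known landmarks and measured ranges."""
    if len(landmarks) != len(ranges) or len(landmarks) < 3:
        raise ValueError("need at least three landmarks, one range each")
    (x0, y0), r0 = landmarks[0], ranges[0]
    # Subtracting the first circle equation from the others removes the
    # quadratic terms and leaves a linear system A @ [x, y] = b.
    rows = []
    for (xi, yi), ri in zip(landmarks[1:], ranges[1:]):
        a1 = 2.0 * (xi - x0)
        a2 = 2.0 * (yi - y0)
        b = r0**2 - ri**2 + xi**2 - x0**2 + yi**2 - y0**2
        rows.append((a1, a2, b))
    # Solve the 2x2 normal equations (A^T A) p = A^T b by Cramer's rule.
    s11 = sum(a1 * a1 for a1, _, _ in rows)
    s12 = sum(a1 * a2 for a1, a2, _ in rows)
    s22 = sum(a2 * a2 for _, a2, _ in rows)
    t1 = sum(a1 * b for a1, _, b in rows)
    t2 = sum(a2 * b for _, a2, b in rows)
    det = s11 * s22 - s12 * s12
    if abs(det) < 1e-12:
        raise ValueError("landmarks are collinear; position is ambiguous")
    return (s22 * t1 - s12 * t2) / det, (s11 * t2 - s12 * t1) / det


# Four map features (in metres) and noise-free ranges from the point (3, 4).
corners = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0), (10.0, 10.0)]
ranges = [hypot(3.0 - cx, 4.0 - cy) for cx, cy in corners]
x, y = trilaterate_2d(corners, ranges)  # close to (3.0, 4.0)
```

With real, noisy ranges the result is only an estimate, and a production system would typically fuse it with odometry or GNSS rather than use it alone.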

In most studies, two kinds of sensors have been used: LiDAR-based and vision-based (some sort of camera). For instance, a camera has been used to detect road markings, such as arrows, which were then used to localize the vehicle. Although vision-based approaches deliver promising results, they depend on illumination conditions, so detecting features is harder in darkness. LiDAR-based approaches, on the other hand, give more accurate results because:

- LiDAR range measurements are accurate

- LiDAR does not depend on illumination

- LiDAR provides a 3D representation of the environment

Researchers have built a 2D view of the LiDAR perception, detected road markings in it using the Hough transform, and localized the vehicle at lane level in an HD map. In another approach, LiDAR was used to detect road signs, such as speed limit signs, to improve the vehicle’s position within a third-party map.
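To give a feel for the Hough transform, here is a toy version that works directly on 2D points, for example LiDAR returns from a lane marking in a top-down view. It is only a sketch of the voting idea, not the pipeline used in those studies; the function name, step sizes, and example points are assumptions.

```python
from collections import Counter
from math import cos, radians, sin


def strongest_line(points, theta_step_deg=1, rho_step=0.1):
    """Return (theta_deg, rho, votes) of the most-voted line through `points`.

    A line is parameterized as rho = x * cos(theta) + y * sin(theta).
    """
    votes = Counter()
    for x, y in points:
        # Each point votes for every (theta, rho) line that passes through it.
        for theta_deg in range(0, 180, theta_step_deg):
            theta = radians(theta_deg)
            rho = x * cos(theta) + y * sin(theta)
            votes[(theta_deg, round(rho / rho_step))] += 1
    (theta_deg, rho_bin), count = votes.most_common(1)[0]
    return theta_deg, rho_bin * rho_step, count


# Ten points on the line x = 2 (a straight marking) plus two outliers.
points = [(2.0, float(y)) for y in range(10)] + [(5.0, 1.0), (7.5, 3.0)]
theta_deg, rho, count = strongest_line(points)
```

Here the winning cell is theta = 0 degrees and rho = 2, supported by the ten collinear points, while the outliers scatter their votes across other cells.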

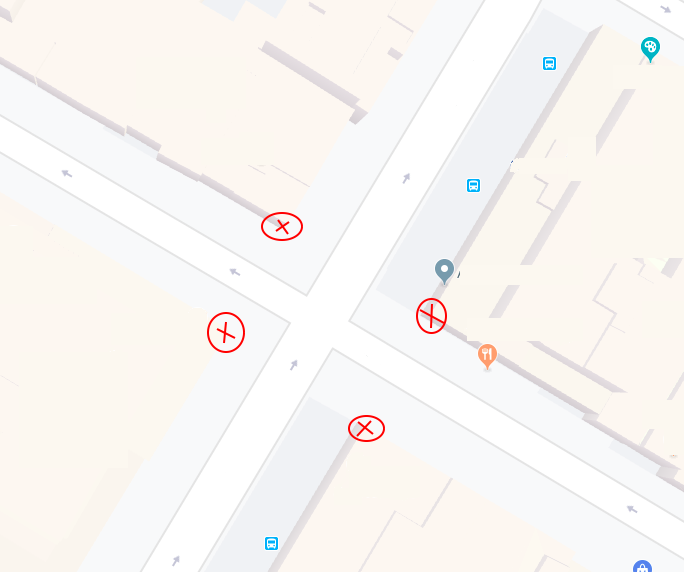

As you may notice, selecting the right features for localizing a vehicle on the road is vital. It is very important that a feature keeps a constant position for a long time. With that in mind, we can consider buildings good features because most of them will last for years. So the idea here is to use the locations of building corners at an intersection, as shown in Fig. 2.

The vehicle scans the environment using LiDAR, and the result is published to a topic that is read by an image processing agent. This agent tries to find the corners in each set of data, finds their matches in the map, and in the end uses their locations to localize the vehicle.
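The matching step of such an agent could look roughly like the sketch below. It assumes that corner detection has already produced corner points in the vehicle frame and that a rough prior pose is available (for example from the last estimate). The nearest-neighbour rule, the 2 m gate, and all names and coordinates are illustrative assumptions, not the method of a specific system.

```python
from math import cos, hypot, sin


def to_map_frame(point, pose):
    """Transform a vehicle-frame point into the map frame using pose (x, y, yaw)."""
    px, py = point
    x, y, yaw = pose
    return (x + px * cos(yaw) - py * sin(yaw),
            y + px * sin(yaw) + py * cos(yaw))


def match_corners(detected, map_corners, prior_pose, gate_m=2.0):
    """Pair each detected corner with the nearest map corner within gate_m."""
    matches = []
    for corner in detected:
        mx, my = to_map_frame(corner, prior_pose)
        best = min(map_corners, key=lambda c: hypot(c[0] - mx, c[1] - my))
        # Reject detections that are too far from every mapped corner.
        if hypot(best[0] - mx, best[1] - my) <= gate_m:
            matches.append((corner, best))
    return matches


# Building corners of an intersection in map coordinates (metres).
map_corners = [(0.0, 0.0), (20.0, 0.0), (0.0, 20.0), (20.0, 20.0)]
prior_pose = (9.5, 10.3, 0.0)  # rough guess; the true position is (10, 10)
# Three real corners seen from the vehicle plus one spurious detection.
detected = [(-10.0, -10.0), (10.0, -10.0), (10.0, 10.0), (3.0, 1.0)]
matches = match_corners(detected, map_corners, prior_pose)
```

The spurious detection is dropped because no mapped corner lies within the gate, and the three remaining pairs give map positions plus measured offsets that a position estimator can use.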